@@ -549,18 +549,68 @@ The implementation does not include a network protocol to support the daemon
setup as described in section \ref{sec:daemon}. Therefore, it is only usable in
Python programs. Thus, the two test programs are also written in Python.
-\section{Full screen Pygame program}
+The area implementations contain some geometric functions to determine whether
+an event should be delegated to an area. All gesture trackers have been
+implemented using an imperative programming style. Technical details about the
+implementation of gesture detection are described in appendix
+\ref{app:implementation-details}.
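The geometric delegation test mentioned above can be illustrated with a minimal sketch. It assumes rectangular areas expressed in absolute screen coordinates and organised as a tree of child areas. The class and method names (`RectangularArea`, `contains`, `find_target`) are hypothetical and not taken from the reference implementation. In this sketch, an event is delegated to the deepest area that contains its position.

```python
from dataclasses import dataclass, field


@dataclass
class RectangularArea:
    """Hypothetical axis-aligned area in screen coordinates (not the real API)."""
    x: float
    y: float
    width: float
    height: float
    children: list = field(default_factory=list)

    def contains(self, px, py):
        # Half-open interval test, so two adjacent areas never share a point.
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)

    def find_target(self, px, py):
        """Return the deepest area that contains the point, or None."""
        if not self.contains(px, py):
            return None
        # Later children are assumed to lie on top, so they are checked first.
        for child in reversed(self.children):
            target = child.find_target(px, py)
            if target is not None:
                return target
        return self


root = RectangularArea(0, 0, 1920, 1080)
button = RectangularArea(100, 100, 200, 50)
root.children.append(button)
```

With this setup, `root.find_target(150, 120)` returns `button`, a point outside the button returns `root`, and a point outside the screen returns `None`.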

-% TODO
+\section{Full screen Pygame program}
-The first test application was initially implemented without application of the
-architecture design. This application was a Python port of a
-Processing\footnote{} program found on the internet, with some adaptations to
-work with the TUIO protocol.
+%The goal of this program was to experiment with the TUIO
+%protocol, and to discover requirements for the architecture that was to be
+%designed. When the architecture design was completed, the program was rewritten
+%using the new architecture components. The original variant is still available
+%in the ``experimental'' folder of the Git repository \cite{gitrepos}.
+
+A Processing\footnote{Processing is a Java-based programming environment with
+the option to export to Android. See also \cite{processing}.} program that
+detects some simple multi-touch gestures (single tap, double tap, rotation,
+pinch and drag) can be found in a forum on the Processing website
+\cite{processingMT}. This program has been ported to Python and adapted to
+receive input from the TUIO protocol. The implementation is fairly simple, but
+it yields some appealing results (see figure \ref{fig:draw}). In the original
+program, the detection logic of all gestures is combined in a single class.
+As predicted by the GART article \cite{GART}, this leads to overly complex
+code that is difficult to read and debug.
+
+The application has been rewritten using the reference implementation of the
+architecture. The detection code is split into two gesture
+trackers: the ``tap'' and ``transformation'' trackers described in
+section \ref{sec:implementation}.
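A tap tracker written in the imperative style described above might look roughly like the following sketch. The class name, the callback signature and the three thresholds are assumptions chosen for illustration. They are not values from the reference implementation. For simplicity, the first tap of a double tap is also reported as a single tap. A tracker that must suppress it would have to delay single taps until the double tap interval has passed.

```python
import math


class TapTracker:
    """Hypothetical tap tracker; all thresholds are illustrative assumptions."""

    MAX_TAP_TIME = 0.3        # max seconds a point may stay down for a tap
    MAX_TAP_DISTANCE = 10.0   # max pixels a point may move during a tap
    DOUBLE_TAP_TIME = 0.4     # max seconds between two taps of a double tap

    def __init__(self, handler):
        self.handler = handler  # called as handler(gesture_name, x, y)
        self.down = {}          # point id -> (time, x, y) at point_down
        self.last_tap = None    # (time, x, y) of the previous single tap

    def on_point_down(self, pid, x, y, t):
        self.down[pid] = (t, x, y)

    def on_point_up(self, pid, x, y, t):
        start = self.down.pop(pid, None)
        if start is None:
            return  # unknown point: it went down before the tracker existed
        t0, x0, y0 = start
        if (t - t0 > self.MAX_TAP_TIME
                or math.hypot(x - x0, y - y0) > self.MAX_TAP_DISTANCE):
            return  # held too long or moved too far: not a tap
        previous = self.last_tap
        if (previous is not None
                and t - previous[0] <= self.DOUBLE_TAP_TIME
                and math.hypot(x - previous[1], y - previous[2])
                <= self.MAX_TAP_DISTANCE):
            self.last_tap = None  # a third tap starts a new sequence
            self.handler("double_tap", x, y)
        else:
            self.last_tap = (t, x, y)
            self.handler("single_tap", x, y)
```

The tracker only needs the \emph{point\_down} and \emph{point\_up} events. Movement is judged from the start and end positions alone.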

+
+The application receives TUIO events and translates them into \emph{point\_down},
+\emph{point\_move} and \emph{point\_up} events. These events are then
+interpreted as \emph{single tap}, \emph{double tap}, \emph{rotation} or
+\emph{pinch} gestures. The positions of all touch objects are drawn using the
+Pygame library. Since Pygame provides no way to determine the location of the
+display window, the root area captures events on the entire screen surface.
+The application can be run either full screen or in windowed mode. In windowed
+mode, screen-wide gesture coordinates are mapped to the size of the Pygame
+window. In other words, the Pygame window always represents the
+entire touch surface. The output of the program can be seen in figure
+\ref{fig:draw}.
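The translation from TUIO input to point events, including the mapping of normalized coordinates onto the window, can be sketched as follows. The sketch assumes that the OSC messages of the TUIO cursor profile have already been decoded. `set_cursor` receives the session id and normalized position from one SET message, and `alive` receives the session ids listed in an ALIVE message. `TuioTranslator` and its methods are hypothetical names.

```python
class TuioTranslator:
    """Hypothetical translator from decoded TUIO cursor data to point events."""

    def __init__(self, width, height, emit):
        self.width = width    # window width in pixels
        self.height = height  # window height in pixels
        self.emit = emit      # called as emit(event_type, session_id, x, y)
        self.points = {}      # session id -> last known pixel position

    def to_pixels(self, nx, ny):
        # TUIO positions are normalized to [0, 1]; scaling them by the window
        # size makes the window represent the entire touch surface.
        return nx * self.width, ny * self.height

    def set_cursor(self, sid, nx, ny):
        x, y = self.to_pixels(nx, ny)
        event = "point_move" if sid in self.points else "point_down"
        self.points[sid] = (x, y)
        self.emit(event, sid, x, y)

    def alive(self, session_ids):
        # A known session id that is missing from ALIVE has been lifted.
        for sid in [s for s in self.points if s not in session_ids]:
            x, y = self.points.pop(sid)
            self.emit("point_up", sid, x, y)
```

For an 800 by 600 window, a SET message for session 7 at (0.5, 0.25) produces a \emph{point\_down} at pixel (400.0, 150.0). A later ALIVE message without session 7 produces the matching \emph{point\_up}.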

|

|

|

+

|

|

|

+\begin{figure}[h!]

|

|

|

+ \center

|

|

|

+ \includegraphics[scale=0.4]{data/pygame_draw.png}

|

|

|

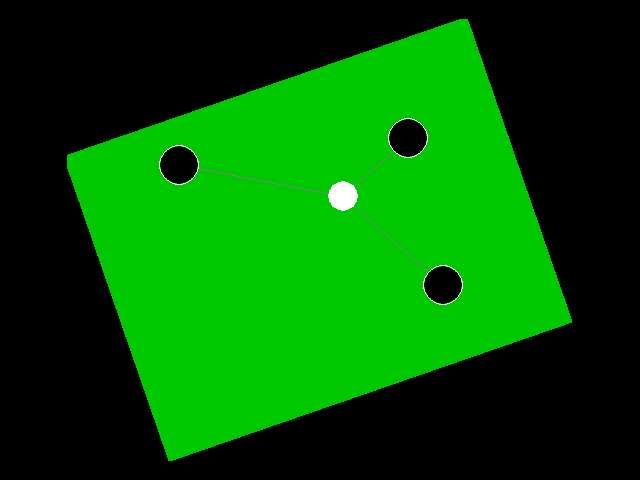

+ \caption{Output of the experimental drawing program. It draws all touch

|

|

|

+ points and their centroid on the screen (the centroid is used for rotation

|

|

|

+ and pinch detection). It also draws a green rectangle which

|

|

|

+ \label{fig:draw}

|

|

|

+responds to rotation and pinch events.}

|

|

|

+\end{figure}

|

|

|

|

|

|

\section{GTK/Cairo program}

|

|

|

|

|

|

+The second test application uses the GIMP toolkit (GTK+) \cite{GTK} to create
+its user interface. Since GTK+ runs its own main event loop, which must be
+started for the interface to respond, the architecture implementation runs in a
+separate thread. The application creates a main window, whose size and position are
+synchronized with the root area of the architecture.
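The threading arrangement can be sketched without GTK+ itself. In this hypothetical example, a worker thread stands in for the architecture and hands events to the main thread through a `queue.Queue`. The blocking loop in `main_loop` stands in for the GTK+ main loop. In a real PyGObject program, the worker would more typically schedule callbacks on the main loop with `GLib.idle_add`, because GTK+ widgets should only be used from the main thread. The function names and the `None` sentinel are assumptions for illustration.

```python
import queue
import threading


def run_architecture(events, touches):
    """Hypothetical stand-in for the architecture's event loop.

    The real program would receive TUIO input and run the gesture trackers;
    here a list of pre-made events is simply forwarded to the main thread.
    """
    for touch in touches:
        events.put(touch)
    events.put(None)  # sentinel: no more input


def main_loop(events):
    """Stand-in for the GUI main loop; consumes events on the main thread."""
    handled = []
    while True:
        event = events.get()
        if event is None:
            break
        handled.append(event)  # a real GUI would update the window here
    return handled


events = queue.Queue()
worker = threading.Thread(target=run_architecture,
                          args=(events, [("point_down", 1), ("point_up", 1)]),
                          daemon=True)
worker.start()
result = main_loop(events)
worker.join()
```

Marking the worker as a daemon thread means it cannot keep the process alive after the user closes the main window.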

+
% TODO
+\emph{TODO: expand this section and add screenshots (this program is not finished yet)}
\chapter{Conclusions}
@@ -640,73 +690,20 @@ client application, as stated by the online specification
values back to the actual screen dimension.
\end{quote}
-\chapter{Experimental program}
-\label{app:experiment}
-
- % TODO: This is not really 'related', move it to somewhere else
- \section{Processing implementation of simple gestures in Android}
-
- An implementation of a detection architecture for some simple multi-touch
- gestures (tap, double tap, rotation, pinch and drag) using
- Processing\footnote{Processing is a Java-based development environment with
- an export possibility for Android. See also \url{http://processing.org/.}}
- can be found in a forum on the Processing website \cite{processingMT}. The
- implementation is fairly simple, but it yields some very appealing results.
- The detection logic of all gestures is combined in a single class. This
- does not allow for extendability, because the complexity of this class
- would increase to an undesirable level (as predicted by the GART article
- \cite{GART}). However, the detection logic itself is partially re-used in
- the reference implementation of the generic gesture detection architecture.
-
-% TODO: rewrite intro
-When designing a software library, its API should be understandable and easy to
-use for programmers. To find out the basic requirements of the API to be
-usable, an experimental program has been written based on the Processing code
-from \cite{processingMT}. The program receives TUIO events and translates them
-to point \emph{down}, \emph{move} and \emph{up} events. These events are then
-interpreted to be (double or single) \emph{tap}, \emph{rotation} or
-\emph{pinch} gestures. A simple drawing program then draws the current state to
-the screen using the PyGame library. The output of the program can be seen in
-figure \ref{fig:draw}.
-
-\begin{figure}[H]
- \center
- \label{fig:draw}
- \includegraphics[scale=0.4]{data/experimental_draw.png}
- \caption{Output of the experimental drawing program. It draws the touch
- points and their centroid on the screen (the centroid is used as center
- point for rotation and pinch detection). It also draws a green
- rectangle which responds to rotation and pinch events.}
-\end{figure}
-
-One of the first observations is the fact that TUIO's \texttt{SET} messages use
-the TUIO coordinate system, as described in appendix \ref{app:tuio}. The test
-program multiplies these with its own dimensions, thus showing the entire
-screen in its window. Also, the implementation only works using the TUIO
-protocol. Other drivers are not supported.
-
-Though using relatively simple math, the rotation and pinch events work
-surprisingly well. Both rotation and pinch use the centroid of all touch
-points. A \emph{rotation} gesture uses the difference in angle relative to the
-centroid of all touch points, and \emph{pinch} uses the difference in distance.
-Both values are normalized using division by the number of touch points. A
-pinch event contains a scale factor, and therefore uses a division of the
-current by the previous average distance to the centroid.
-
-There is a flaw in this implementation. Since the centroid is calculated using
-all current touch points, there cannot be two or more rotation or pinch
-gestures simultaneously. On a large multi-touch table, it is desirable to
-support interaction with multiple hands, or multiple persons, at the same time.
-This kind of application-specific requirements should be defined in the
-application itself, whereas the experimental implementation defines detection
-algorithms based on its test program.
-
-Also, the different detection algorithms are all implemented in the same file,
-making it complex to read or debug, and difficult to extend.
-
\chapter{Diagram demonstrating event propagation}
\label{app:eventpropagation}
\eventpropagationfigure
+\chapter{Gesture detection in the reference implementation}
+\label{app:implementation-details}
+
+% TODO
+Both rotation and pinch use the centroid of all touch points. A \emph{rotation}
+gesture uses the change in angle of each touch point relative to the centroid,
+and a \emph{pinch} gesture uses the change in distance to the centroid. Both
+values are normalized by dividing by the number of touch points. Since a pinch
+event contains a scale factor, that factor is computed by dividing the current by the previous
+average distance to the centroid.
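The rotation and scale computations described above can be sketched as a single function. Two details are assumptions not stated in the text. First, each frame is measured against its own centroid. Second, angle differences are wrapped into [-pi, pi), so that a point crossing the negative x axis does not register as an almost full turn. The function names and argument layout are hypothetical.

```python
import math


def centroid(points):
    """Mean position of a non-empty list of (x, y) points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def rotation_and_scale(previous, current):
    """Return (rotation in radians, scale factor) between two frames.

    `previous` and `current` hold (x, y) positions of the same touch points,
    in the same order, and must contain at least one point.
    """
    pcx, pcy = centroid(previous)
    ccx, ccy = centroid(current)
    n = len(current)
    angle_sum = prev_dist = cur_dist = 0.0
    for (px, py), (cx, cy) in zip(previous, current):
        delta = (math.atan2(cy - ccy, cx - ccx)
                 - math.atan2(py - pcy, px - pcx))
        # Wrap into [-pi, pi) so crossing the negative x axis is not a turn.
        delta = (delta + math.pi) % (2 * math.pi) - math.pi
        angle_sum += delta
        prev_dist += math.hypot(px - pcx, py - pcy)
        cur_dist += math.hypot(cx - ccx, cy - ccy)
    rotation = angle_sum / n  # average angle change per touch point
    # Both average distances share the divisor n, so their ratio equals the
    # ratio of the summed distances; a single point has no spread to scale.
    scale = cur_dist / prev_dist if prev_dist else 1.0
    return rotation, scale


rotation, scale = rotation_and_scale([(1, 0), (-1, 0)], [(0, 2), (0, -2)])
```

In this example, two points at (1, 0) and (-1, 0) move to (0, 2) and (0, -2). This gives a rotation of pi/2 and a scale factor of 2.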

+
\end{document}