@@ -271,7 +271,7 @@ detection for every new gesture-based application.
therefore generate events that simply identify the screen location at which
an event takes place. User interfaces of applications that do not run in

-full screen modus are contained in a window. Events which occur outside the
+full screen mode are contained in a window. Events which occur outside the

- application window should not be handled by the program in most cases.
+ application window should not be handled by the application in most cases.

-What's more, widget within the application window itself should be able to
+What's more, widgets within the application window itself should be able to

respond to different gestures. E.g. a button widget may respond to a
``tap'' gesture to be activated, whereas the application window responds to

@@ -561,8 +561,9 @@ start the GUI main loop in the current thread
A reference implementation of the design has been written in Python. Two test
applications have been created to test if the design ``works'' in a practical
application, and to detect its flaws. One application is mainly used to test
-the gesture tracker implementations. The other program uses multiple event
-areas in a tree structure, demonstrating event delegation and propagation.
+the gesture tracker implementations. The other application uses multiple event
+areas in a tree structure, demonstrating event delegation and propagation. The
+second application also defines a custom gesture tracker.

To test multi-touch interaction properly, a multi-touch device is required. The
University of Amsterdam (UvA) has provided access to a multi-touch table from

@@ -585,14 +586,6 @@ The reference implementation is written in Python and available at
$(x, y)$ position.
\end{itemize}

-\textbf{Gesture trackers}
-\begin{itemize}
- \item Basic tracker, supports $point\_down,~point\_move,~point\_up$ gestures.
- \item Tap tracker, supports $tap,~single\_tap,~double\_tap$ gestures.
- \item Transformation tracker, supports $rotate,~pinch,~drag$ gestures.
- \item Hand tracker, supports $hand\_down,~hand\_up$ gestures.
-\end{itemize}
-
\textbf{Event areas}
\begin{itemize}
\item Circular area
@@ -601,6 +594,13 @@ The reference implementation is written in Python and available at
\item Full screen area
\end{itemize}

+\textbf{Gesture trackers}
+\begin{itemize}
+ \item Basic tracker, supports $point\_down,~point\_move,~point\_up$ gestures.
+ \item Tap tracker, supports $tap,~single\_tap,~double\_tap$ gestures.
+ \item Transformation tracker, supports $rotate,~pinch,~drag,~flick$ gestures.
+\end{itemize}
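To illustrate how a tap tracker might turn the basic point_down and point_up events into the three tap gestures, here is a minimal Python sketch. The class name TapTracker, its methods, the emit callback and all four thresholds are hypothetical and not taken from the reference implementation. It also assumes that tap fires for every tap, double_tap fires on the second of two quick, nearby taps, and single_tap fires only once no second tap can follow.

```python
import math

# Hypothetical thresholds; the reference implementation's actual values are not stated.
TAP_MAX_DURATION = 0.3      # seconds between point_down and point_up
TAP_MAX_DISTANCE = 10.0     # pixels a point may move and still count as a tap
DOUBLE_TAP_INTERVAL = 0.3   # seconds allowed between the two taps of a double tap
DOUBLE_TAP_DISTANCE = 20.0  # pixels allowed between the two taps of a double tap


class TapTracker:
    """Hypothetical tracker turning point_down/point_up events into tap gestures."""

    def __init__(self, emit):
        self.emit = emit     # callback: emit(gesture_type, x, y)
        self.down = {}       # touch point id -> (x, y, time) of its point_down
        self.pending = None  # (x, y, time) of a tap that may still become a double tap

    def point_down(self, point_id, x, y, t):
        self.down[point_id] = (x, y, t)

    def point_up(self, point_id, x, y, t):
        self.update(t)  # first flush a pending tap that is too old to be doubled
        start = self.down.pop(point_id, None)
        if start is None:
            return
        x0, y0, t0 = start
        if t - t0 > TAP_MAX_DURATION or math.hypot(x - x0, y - y0) > TAP_MAX_DISTANCE:
            return  # held too long or moved too far: not a tap
        self.emit("tap", x, y)
        if self.pending is not None:
            px, py, _ = self.pending
            if math.hypot(x - px, y - py) <= DOUBLE_TAP_DISTANCE:
                self.pending = None
                self.emit("double_tap", x, y)
                return
            # Too far from the pending tap: that one was a single tap after all.
            self.emit("single_tap", px, py)
        self.pending = (x, y, t)

    def update(self, t):
        """Call periodically; a pending tap becomes a single tap once the interval expires."""
        if self.pending is not None and t - self.pending[2] > DOUBLE_TAP_INTERVAL:
            px, py, _ = self.pending
            self.pending = None
            self.emit("single_tap", px, py)
```

Because the single_tap decision depends on time passing without a second tap, the sketch needs a periodic update call in addition to the point events themselves.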

+
The implementation does not include a network protocol to support the daemon
setup as described in section \ref{sec:daemon}. Therefore, it is only usable in
Python programs. The two test programs are also written in Python.
@@ -611,11 +611,11 @@ have been implemented using an imperative programming style. Technical details
about the implementation of gesture detection are described in appendix
\ref{app:implementation-details}.

-\section{Full screen Pygame program}
+\section{Full screen Pygame application}

-%The goal of this program was to experiment with the TUIO
+%The goal of this application was to experiment with the TUIO
%protocol, and to discover requirements for the architecture that was to be
-%designed. When the architecture design was completed, the program was rewritten
+%designed. When the architecture design was completed, the application was rewritten
%using the new architecture components. The original variant is still available
%in the ``experimental'' folder of the Git repository \cite{gitrepos}.

@@ -623,10 +623,10 @@ An implementation of the detection of some simple multi-touch gestures (single
tap, double tap, rotation, pinch and drag) using Processing\footnote{Processing
is a Java-based programming environment with an export possibility for Android.
See also \cite{processing}.} can be found in a forum on the Processing website
-\cite{processingMT}. The program has been ported to Python and adapted to
+\cite{processingMT}. The application has been ported to Python and adapted to
receive input from the TUIO protocol. The implementation is fairly simple, but
it yields some appealing results (see figure \ref{fig:draw}). In the original
-program, the detection logic of all gestures is combined in a single class
+application, the detection logic of all gestures is combined in a single class
file. As predicted by the GART article \cite{GART}, this leads to over-complex
code that is difficult to read and debug.

@@ -635,16 +635,13 @@ architecture. The detection code is separated into two different gesture
trackers, which are the ``tap'' and ``transformation'' trackers mentioned in
section \ref{sec:implementation}.

-The application receives TUIO events and translates them to \emph{point\_down},
-\emph{point\_move} and \emph{point\_up} events. These events are then
-interpreted to be \emph{single tap}, \emph{double tap}, \emph{rotation} or
-\emph{pinch} gestures. The positions of all touch objects are drawn using the
+The positions of all touch objects and their centroid are drawn using the
Pygame library. Since the Pygame library does not provide support to find the
location of the display window, the root event area captures events in the

-entire screens surface. The application can be run either full screen or in
+entire screen surface. The application can be run either full screen or in

windowed mode. If windowed, screen-wide gesture coordinates are mapped to the

-size of the Pyame window. In other words, the Pygame window always represents
+size of the Pygame window. In other words, the Pygame window always represents

-the entire touch surface. The output of the program can be seen in figure
+the entire touch surface. The output of the application can be seen in figure
\ref{fig:draw}.
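The mapping of screen-wide coordinates onto the Pygame window amounts to a separate scaling in each axis. This minimal Python sketch assumes that gesture coordinates arrive in screen pixels and that the window always shows the full touch surface, possibly with a different aspect ratio; the function names are hypothetical. In full screen mode both sizes are equal and the mapping is the identity. If positions are still in the TUIO cursor range, which is normalized to [0, 1], the window size alone suffices.

```python
def screen_to_window(x, y, screen_size, window_size):
    """Map a screen-wide point (pixels) into a window representing the whole touch surface."""
    screen_w, screen_h = screen_size
    window_w, window_h = window_size
    # Independent scale per axis, so the full surface always fits the window.
    return x * window_w / screen_w, y * window_h / screen_h


def normalized_to_window(x, y, window_size):
    """Scale a normalized [0, 1] position (as in TUIO cursor messages) to window pixels."""
    window_w, window_h = window_size
    return x * window_w, y * window_h
```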


\begin{figure}[h!]
@@ -652,26 +649,70 @@ the entire touch surface. The output of the program can be seen in figure
\includegraphics[scale=0.4]{data/pygame_draw.png}
\caption{Output of the experimental drawing program. It draws all touch
points and their centroid on the screen (the centroid is used for rotation
- and pinch detection). It also draws a green rectangle which
+ and pinch detection). It also draws a green rectangle which responds to
+ rotation and pinch events.}
\label{fig:draw}
-responds to rotation and pinch events.}
\end{figure}
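The caption states that the centroid of the touch points is used for rotation and pinch detection. One common formulation, shown here as a Python sketch and not necessarily the one used in the reference implementation, compares two successive frames of the same touch points: the rotation is the average change of each point's angle around the centroid, and the pinch scale is the ratio of the average distances to the centroid. The function names are hypothetical.

```python
import math


def centroid(points):
    """Average position of a list of (x, y) points."""
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n


def rotation_and_scale(before, after):
    """Estimate rotation (radians) and pinch scale between two frames.

    before and after hold the positions of the same touch points, in the same
    order. With screen coordinates (y pointing down), a positive angle appears
    clockwise on screen.
    """
    cx0, cy0 = centroid(before)
    cx1, cy1 = centroid(after)
    total_angle = 0.0
    dist_before = dist_after = 0.0
    for (x0, y0), (x1, y1) in zip(before, after):
        a0 = math.atan2(y0 - cy0, x0 - cx0)
        a1 = math.atan2(y1 - cy1, x1 - cx1)
        # Wrap the angle difference into [-pi, pi) so crossing +-pi is handled.
        total_angle += (a1 - a0 + math.pi) % (2 * math.pi) - math.pi
        dist_before += math.hypot(x0 - cx0, y0 - cy0)
        dist_after += math.hypot(x1 - cx1, y1 - cy1)
    angle = total_angle / len(before)
    # Ratio of summed distances equals the ratio of average distances.
    scale = dist_after / dist_before if dist_before > 0 else 1.0
    return angle, scale
```

Measuring both quantities relative to the centroid makes them independent of a simultaneous drag, since a pure translation moves the centroid along with the points.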


-\section{GTK/Cairo program}
+\section{GTK+/Cairo application}

The second test application uses the GIMP toolkit (GTK+) \cite{GTK} to create
its user interface. Since GTK+ defines a main event loop that is started in
order to use the interface, the architecture implementation runs in a separate
-thread. The application creates a main window, whose size and position are
-synchronized with the root event area of the architecture.
-
-% TODO
-\emph{TODO: uitbreiden en screenshots erbij (dit programma is nog niet af)}
+thread.
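Because GTK+ owns the main loop, gesture events are produced in a different thread from the one that should touch the widgets. A common way to bridge the two, sketched below with only the Python standard library, is to let the architecture thread hand events over through a thread-safe queue that the GUI thread consumes. With PyGTK or PyGObject the hand-over would typically be done by scheduling a callback with gobject.idle_add or GLib.idle_add instead. The text does not say how the reference implementation hands events over, so the function names and the queue are assumptions.

```python
import queue
import threading


def architecture_thread(dispatch):
    """Stand-in for the architecture's input loop, running outside the GUI thread."""
    for i in range(3):
        dispatch(("tap", i * 10, i * 10))  # hypothetical gesture events
    dispatch(None)  # sentinel: no more events


def gui_main_loop(events):
    """Stand-in for the GUI main loop: every gesture is handled in this thread."""
    handled = []
    while True:
        event = events.get()  # blocks until the architecture thread queues an event
        if event is None:
            break
        # A real handler would update widgets here; record the handling thread instead.
        handled.append((event, threading.get_ident()))
    return handled


events = queue.Queue()  # thread-safe hand-over between the two threads
worker = threading.Thread(target=architecture_thread, args=(events.put,), daemon=True)
worker.start()
handled = gui_main_loop(events)
worker.join()
```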

+
+The application creates a main window, whose size and position are synchronized
+with the root event area of the architecture. The synchronization is handled
+automatically by a \texttt{GtkEventWindow} object, which is a subclass of
+\texttt{gtk.Window}. This object serves as a layer that connects the event area
+functionality of the architecture to GTK+ windows.

+
+The main window contains a number of polygons which can be dragged, resized and
+rotated. Each polygon is represented by a separate event area, which allows
+simultaneous interaction with different polygons. The main window also responds
+to transformation gestures by transforming all polygons. Additionally, double
+tapping a polygon changes its color.
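Since each polygon is its own event area, the architecture must decide whether a touch point falls inside a polygon, and dragging, resizing and rotating change the polygon's vertices. The Python sketch below shows one way to do both. The PolygonArea class and its methods are hypothetical; hit testing uses the standard even-odd ray casting test, and transformations are applied around the vertex average, which is an assumed choice of pivot.

```python
import math


class PolygonArea:
    """Hypothetical polygon-shaped event area (not the reference implementation's API)."""

    def __init__(self, vertices):
        self.vertices = [(float(x), float(y)) for x, y in vertices]

    def contains(self, x, y):
        # Even-odd rule: count edges crossed by a horizontal ray from (x, y) to the right.
        inside = False
        n = len(self.vertices)
        for i in range(n):
            x1, y1 = self.vertices[i]
            x2, y2 = self.vertices[(i + 1) % n]
            if (y1 > y) != (y2 > y):
                # x-coordinate where this edge crosses the horizontal line through y
                x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
                if x < x_cross:
                    inside = not inside
        return inside

    def pivot(self):
        """Vertex average, used here as the centre of rotation and scaling."""
        n = len(self.vertices)
        return (sum(v[0] for v in self.vertices) / n,
                sum(v[1] for v in self.vertices) / n)

    def transform(self, angle=0.0, scale=1.0, dx=0.0, dy=0.0):
        """Rotate and scale around the pivot, then translate by (dx, dy)."""
        cx, cy = self.pivot()
        cos_a, sin_a = math.cos(angle), math.sin(angle)
        moved = []
        for x, y in self.vertices:
            rx, ry = (x - cx) * scale, (y - cy) * scale
            moved.append((cx + rx * cos_a - ry * sin_a + dx,
                          cy + rx * sin_a + ry * cos_a + dy))
        self.vertices = moved
```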

+
+An ``overlay'' event area is used to detect all fingers currently touching the
+screen. The application defines a custom gesture tracker, called the ``hand
+tracker'', which is used by the overlay. The hand tracker uses the distances
+between detected fingers to determine which fingers belong to the same hand.
+The application draws a line from each finger to the centroid of the hand it
+belongs to, as shown in figure \ref{fig:testapp}.
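The text only states that the hand tracker groups fingers using the distances between them. One plausible reading, sketched here in Python, is single-linkage grouping: two fingers belong to the same hand if a chain of fingers, each within a fixed distance of the next, connects them. The threshold value and the function names are assumptions, and the grouping is implemented with a small union-find structure.

```python
import math

HAND_DISTANCE = 200.0  # hypothetical: max pixels between neighbouring fingers of one hand


def group_into_hands(fingers, max_distance=HAND_DISTANCE):
    """Group finger ids into hands by single linkage.

    fingers maps a touch point id to its (x, y) position. Two fingers end up in
    the same hand if a chain of fingers, each within max_distance of the next,
    connects them.
    """
    ids = list(fingers)
    parent = {i: i for i in ids}  # union-find forest over finger ids

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    for n, a in enumerate(ids):
        ax, ay = fingers[a]
        for b in ids[n + 1:]:
            bx, by = fingers[b]
            if math.hypot(ax - bx, ay - by) <= max_distance:
                parent[find(a)] = find(b)  # merge the two groups

    hands = {}
    for i in ids:
        hands.setdefault(find(i), []).append(i)
    return list(hands.values())


def hand_centroid(fingers, hand):
    """Centroid of one hand: the point the lines to its fingers are drawn from."""
    xs = [fingers[i][0] for i in hand]
    ys = [fingers[i][1] for i in hand]
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

Single linkage lets a spread-out hand stay one group even when its outer fingers are farther apart than the threshold, as long as the fingers in between bridge the gap.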

|

|

|

|

|

|

-\section{Discussion}

|

|

|

+\begin{figure}[h!]

|

|

|

+ \center

|

|

|

+ \includegraphics[scale=0.35]{data/testapp.png}

|

|

|

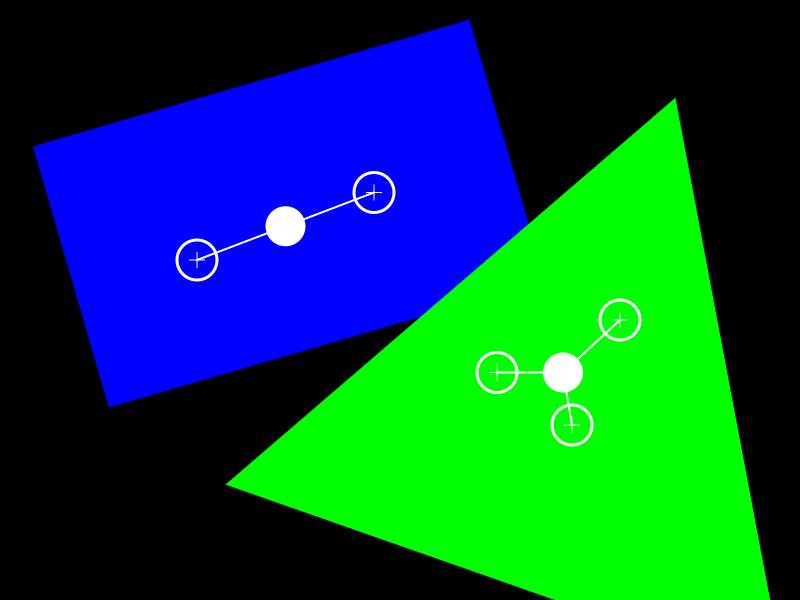

+ \caption{Screenshot of the second test application. Two polygons can be

|

|

|

+ dragged, rotated and scaled. Separate groups of fingers are recognized as

|

|

|

+ hands, each hand is drawn as a centroid with a line to each finger.}

|

|

|

+ \label{fig:testapp}

|

|

|

+\end{figure}

|

|

|

|

|

|

-% TODO

|

|

|

-\emph{TODO: Tekortkomingen aangeven die naar voren komen uit de tests}

|

|

|

+To manage the propagation of events used for transformations, the applications

|

|

|

+arranges its event areas in a tree structure as described in section

|

|

|

+\ref{sec:tree}. Each transformable event area has its own ``transformation

|

|

|

+tracker'', which stops the propagation of events used for transformation

|

|

|

+gestures. Because the propagation of these events is stopped, overlapping

|

|

|

+polygons do not cause a problem. Figure \ref{fig:testappdiagram} shows the tree

|

|

|

+structure used by the application.

+
+Note that the overlay event area, though covering the whole screen surface, is
+not the root event area. The overlay event area is placed on top of the
+application window (as the rightmost sibling of the application window event
+area in the tree). This is necessary because the transformation trackers stop
+event propagation. The hand tracker needs to capture all events to be able to
+give an accurate representation of all fingers touching the screen. Therefore,
+the overlay should delegate events to the hand tracker before they are stopped
+by a transformation tracker. Placing the overlay over the application window
+forces the screen event area to delegate events to the overlay event area
+first.
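The ordering argument for the overlay can be checked with a small model of the event area tree. In this Python sketch, which is an assumed simplification and not the reference implementation, an area delegates an event to the children that contain it, starting with the rightmost (topmost) child, and then passes it to its own handler; a handler that calls stop_propagation ends processing entirely. Class, method and area names are hypothetical, and the polygon here stops every event rather than only transformation events.

```python
class Event:
    """A touch event at screen position (x, y)."""

    def __init__(self, x, y):
        self.x, self.y = x, y
        self.stopped = False

    def stop_propagation(self):
        self.stopped = True


class EventArea:
    """Hypothetical rectangular event area; later children lie on top of earlier ones."""

    def __init__(self, name, x, y, width, height, handler=None):
        self.name = name
        self.rect = (x, y, width, height)
        self.handler = handler  # callable(area, event), e.g. a gesture tracker
        self.children = []

    def add(self, child):
        self.children.append(child)
        return child

    def contains(self, px, py):
        x, y, w, h = self.rect
        return x <= px < x + w and y <= py < y + h

    def delegate(self, event):
        # Rightmost (topmost) child first, so an overlay added last sees events first.
        for child in reversed(self.children):
            if child.contains(event.x, event.y):
                child.delegate(event)
                if event.stopped:
                    return
        # Then the event propagates to this area's own handler.
        if self.handler is not None:
            self.handler(self, event)


def logger(log, stop=False):
    """Handler that records which area handled the event and optionally stops it."""
    def handle(area, event):
        log.append(area.name)
        if stop:
            event.stop_propagation()
    return handle


def build_tree(log, overlay_on_top=True):
    screen = EventArea("screen", 0, 0, 1920, 1080)
    overlay = EventArea("overlay", 0, 0, 1920, 1080, logger(log))  # hand tracker stand-in
    if not overlay_on_top:
        screen.add(overlay)
    window = screen.add(EventArea("window", 100, 100, 800, 600, logger(log)))
    window.add(EventArea("polygon", 200, 200, 100, 100, logger(log, stop=True)))
    if overlay_on_top:
        screen.add(overlay)
    return screen
```

With the overlay added last, a touch on the polygon reaches the overlay before the polygon stops it; with the overlay added first, the polygon's handler stops the event and the overlay never sees it.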

+
+\testappdiagram
+
+%\section{Discussion}
+%
+%\emph{TODO: Point out the shortcomings that emerge from the tests}

-% Verschillende apparaten/drivers geven een ander soort primitieve events af.
-% Een vertaling van deze device-specifieke events naar een algemeen formaat van
+% Different devices/drivers emit different kinds of primitive events.
+% A translation of these device-specific events into a general format of